It's cherry-picking time: more poorly reported science being peddled to journalists

As of the 23rd May 2022 this website is archived and will receive no further updates.

understandinguncertainty.org was produced by the Winton programme for the public understanding of risk, based in the Statistical Laboratory at the University of Cambridge. The aim was to help improve the way that uncertainty and risk are discussed in society, and show how probability and statistics can be both useful and entertaining.

Many of the animations were produced using Flash and will no longer work.

Yesterday the Daily Mail trumpeted “For every hour of screen time, the risk of family life being disrupted and children having poorer emotional wellbeing may be doubled”, while the Daily Telegraph said that “for every hour each day a child spent in front of a screen, the chance of becoming depressed, anxious or being bullied rose by up to 100 per cent”. These dramatic conclusions come from a study whose abstract states:

Across associations, the likelihood of adverse outcomes in children ranged from a 1.2- to 2.0-fold increase for emotional problems and poorer family functioning for each additional hour of television viewing or e-game/computer use depending on the outcome examined.

Unfortunately this is, pure and simple, wrong. And the articles in the papers are misleading too, although note the use of ‘may be doubled’ from the Mail, and ‘up to 100 per cent’ from the Telegraph, which appears to allow them to cherry-pick their evidence as much as they want. So, leaving aside design and analysis issues such as needlessly breaking outcome scales into ‘high’ and ‘low’, what is wrong with the reporting in the paper?

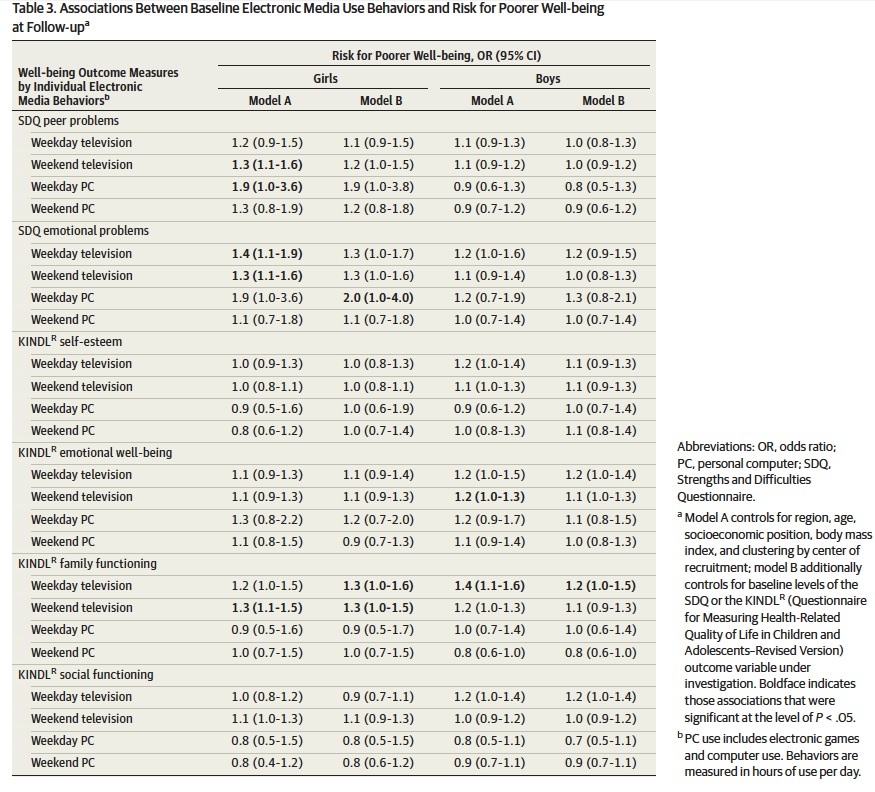

Table 3 of the paper is reproduced below. It shows 96 estimates with 95% confidence intervals. The authors focus on reporting the results that are ‘significantly high’, that is whose 95% intervals lie above 1, of which there are 11, shown in bold and scattered rather haphazardly across the Table.

But there also appear to be 2 ‘significantly low’ odds ratios, whose 95% intervals lie entirely below 1, and 83 odds ratios that are not significantly different from 1. In fact the odds ratios are spread around 1: apart from one odds ratio of 2 (which is very imprecise, with an interval from 1 to 4), they all lie between 0.7 and 1.3.
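The classification used here can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis: the helper `classify_interval` is hypothetical, and the intervals are made-up values chosen only to show each category, not figures from Table 3.

```python
from collections import Counter


def classify_interval(lower: float, upper: float) -> str:
    """Classify a 95% confidence interval for an odds ratio relative to 1 (no effect)."""
    if lower > 1:
        return "significantly high"  # whole interval lies above 1
    if upper < 1:
        return "significantly low"   # whole interval lies below 1
    return "not significant"         # interval includes 1


# Hypothetical intervals for illustration only, NOT taken from Table 3
intervals = [(1.05, 1.45), (0.75, 0.95), (0.85, 1.20), (0.90, 1.10)]
tally = Counter(classify_interval(lo, hi) for lo, hi in intervals)
print(tally)
```

Reporting only the first category, as the abstract does, amounts to reading off one bucket of this tally and ignoring the other two.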

Out of 96 intervals at the 95% level, we would expect around 5 to exclude 1 by chance alone, even if there were no effect. In fact there were 13, suggesting the possibility of a small overall effect, but nowhere near the ‘doubling’ claimed. One would also have to believe that all the many confounding factors that would simultaneously influence TV watching and later behaviour had been fully accounted for, which is extremely doubtful. Maybe watching lots of TV when young does contribute to later problems (it seems quite plausible), but this study does not show it.
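The ‘around 5 by chance’ figure is simply 96 × 0.05 = 4.8. Below is a rough sketch of how surprising 13 would be if there were no effect at all, assuming (unrealistically) that the 96 intervals behave like independent binomial trials. In reality they come from the same children and overlapping outcomes, so the count varies more than this model allows and the tail probability it gives is probably too small. The function name `prob_at_least` is hypothetical.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


n_intervals = 96  # estimates in Table 3
alpha = 0.05      # chance a 95% interval excludes 1 when there is no effect
observed = 13     # 11 'significantly high' + 2 'significantly low'

expected = n_intervals * alpha
tail = prob_at_least(observed, n_intervals, alpha)  # assumes independent intervals
print(f"expected by chance: {expected:.1f}")
print(f"P(at least {observed} by chance): {tail:.4f}")
```

A small tail probability fits the reading that there may be a small overall effect; it says nothing about the size of that effect, which the odds ratios themselves put nowhere near a doubling.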

The crucial insight is that the estimated odds ratios for adverse outcomes range between 0.7 and 2, and not 1.2 and 2 as claimed by the authors in their abstract. Focusing on only the ‘significant’ positive results is, either deliberately or through ignorance, very poor and deeply misleading science. It also shows dismal refereeing. In the text the authors acknowledge that "Few associations were evident", but this does not make it through to the abstract.

Journalists would not have noticed this paper unless it had been press-released by the academic journal. So when you read about some poor science, don’t jump to blame the journalists: it could well be because of the efforts of some scientists, institutions and journals to promote coverage of their activities, regardless of their true quality and importance. Sadly, this behaviour harms scientific credibility.

Postscript

There is also a potential technical problem in that the authors interpret an odds ratio of 2 as doubling the ‘likelihood’. But if an outcome measure has a base rate of around 50%, or odds of 1:1, an odds ratio of 2 multiplies the odds up to 2:1, or a probability of about 67%. So the risk goes up from 50% to 67%, but is not doubled. In fact in the case of the ‘emotional problems’ scale the baseline risk is around 11%, and so an odds ratio of 2 does roughly double the risk.
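The conversion in the postscript can be written as a short function. It is a sketch assuming the odds ratio applies directly to the baseline odds, and the name `risk_after_odds_ratio` is hypothetical. It reproduces the two cases in the text: a 50% baseline rises to about 67%, while an 11% baseline rises to about 20%, close to double.

```python
def risk_after_odds_ratio(baseline_risk: float, odds_ratio: float) -> float:
    """Convert a baseline risk and an odds ratio into the new risk (a probability)."""
    baseline_odds = baseline_risk / (1 - baseline_risk)  # e.g. 50% -> odds of 1:1
    new_odds = odds_ratio * baseline_odds                # the odds ratio scales the odds
    return new_odds / (1 + new_odds)                     # convert odds back to a probability


for baseline in (0.50, 0.11):
    new = risk_after_odds_ratio(baseline, 2.0)
    print(f"baseline {baseline:.0%} -> {new:.0%} (risk ratio {new / baseline:.2f})")
```

The rarer the outcome, the closer an odds ratio comes to a risk ratio, which is why the ‘doubling’ reading is only roughly right for the low-baseline scale.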


Comments

deevybee

Thu, 20/03/2014 - 8:11am


Daily Mail not entirely innocent!
